What Is Seedance 2.0: ByteDance’s AI Video Model and Its Commercial Impact

Seedance 2.0 is a next-generation AI video system developed by ByteDance to produce cinematic, production-ready video at scale. Unlike earlier generative tools focused on short demos, Seedance 2.0 is designed to support real commercial workflows across advertising, entertainment, and digital commerce.

China’s AI video generation race has accelerated rapidly as platforms compete to deliver higher realism, longer temporal consistency, and more controllable outputs. At the same time, cinematic AI video generation has shifted from a novelty into a business requirement, especially for short drama, brand storytelling, and platform-native content. In this article, we’ll break down Seedance 2.0’s technology, use cases, competition, and market impact.

Key Takeaways

- Seedance 2.0 marks a shift in AI video generation from experimental demos to production-ready systems. It focuses on cinematic quality and commercial scalability.

- The Seedance 2.0 AI video model differs from earlier tools by delivering more stable, repeatable outputs. This makes it suitable for real-world advertising and media workflows.

- AI infrastructure growth is now investable through tokenized real-world assets (RWAs) rather than direct platform exposure. Bitget Wallet enables access to AI-related RWAs alongside stablecoins and cross-chain assets.

What Is Seedance 2.0 and How Does It Advance AI Video Generation?

Seedance 2.0 is ByteDance’s latest AI video model, developed to produce cinematic, production-ready video with greater temporal coherence than earlier generation tools. The system is positioned for commercial deployment, addressing limitations in visual stability and scene consistency that have constrained prior AI video generation models.

By emphasizing repeatable output quality and longer narrative continuity, Seedance 2.0 advances AI video generation from short, experimental clips toward scalable use in advertising, serialized content, and platform-native media.

How Is Seedance 2.0 Different From Traditional AI Video Tools?

Seedance 2.0 departs from earlier AI video tools by prioritizing consistency and reliability over short-form visual novelty. Rather than optimizing for brief demonstrations, the model is designed to sustain visual quality and narrative coherence across longer video sequences.

- Longer temporal coherence across multiple scenes

- Fewer visual artifacts during motion and transitions

- Optimized for commercial deployment rather than experimental demos

Why Is Multimodal Video Generation Central to Seedance 2.0?

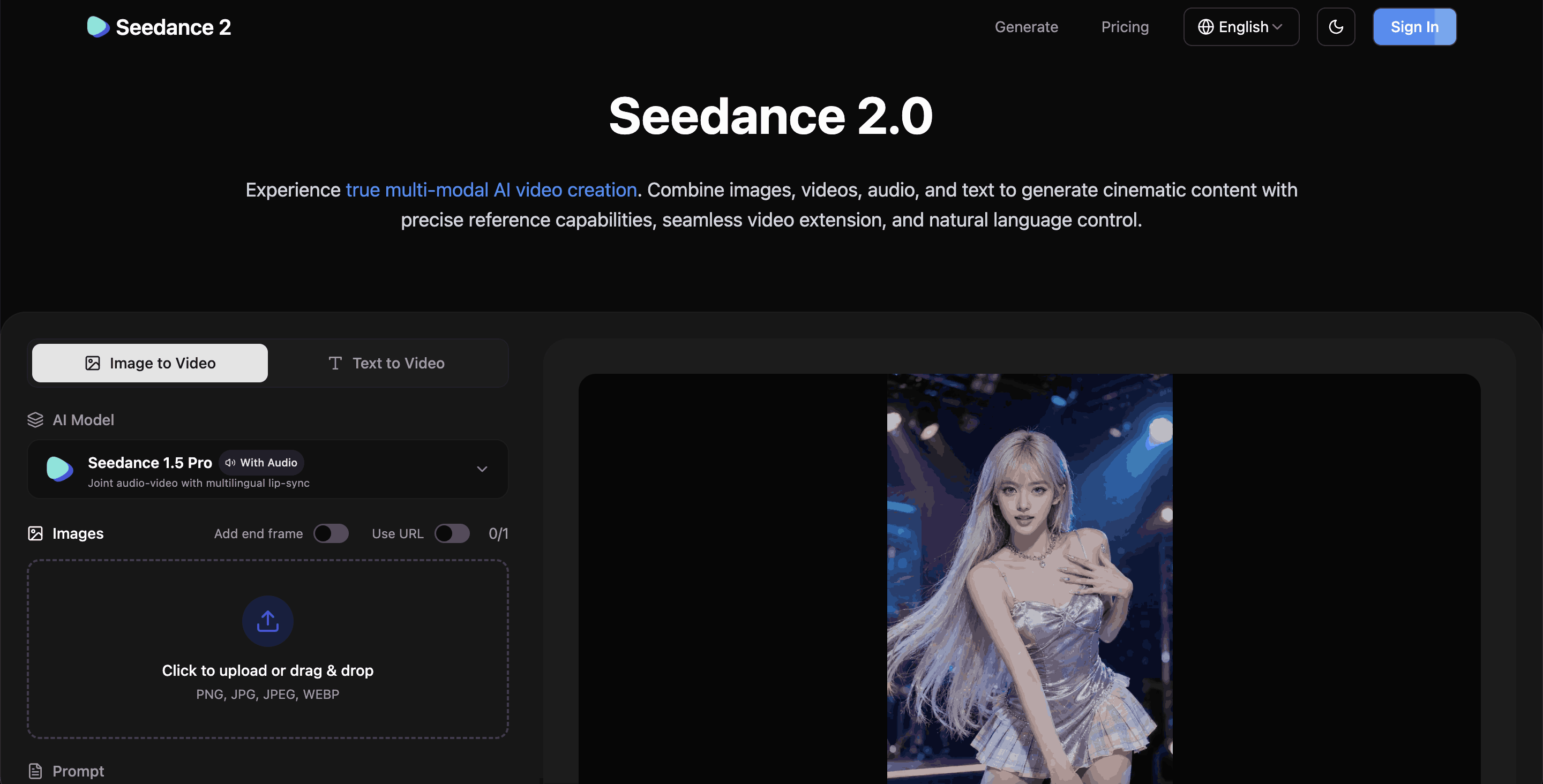

Multimodal video generation is a core design principle behind Seedance 2.0, enabling tighter control over how scenes are composed and evolve over time. By integrating multiple input types, the system supports more deliberate storytelling and production-oriented workflows.

- Combines text, image, and motion conditioning

- Enables scene-level storytelling control

- Aligns with short drama and advertising formats

Source: Seedance 2.0

How Does the Seedance 2.0 AI Video Model Work Technically?

Seedance 2.0 uses a multimodal video generation architecture that combines text and visual inputs within a single pipeline. This lets the model maintain consistent motion, character appearance, and camera framing across scenes, which in turn improves temporal coherence and production stability.

Input Modes Supported by Seedance 2.0

The model supports multiple input modes to give creators greater control over scene composition and narrative flow, depending on production needs.

- Text-to-video prompts

- Image-to-video conditioning

- Hybrid prompt stacks combining both
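The three input modes above can be pictured as a simple request structure. The sketch below is purely illustrative: `VideoRequest`, its fields, and `input_mode` are hypothetical names invented for this example and do not describe the actual Seedance 2.0 interface, which this article does not document.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VideoRequest:
    """Hypothetical request shape; NOT the real Seedance 2.0 API."""

    text_prompt: Optional[str] = None  # assumed field for a text-to-video prompt
    image_path: Optional[str] = None   # assumed field for an image-to-video reference

    def input_mode(self) -> str:
        """Classify the request into one of the three modes listed above."""
        has_text = bool(self.text_prompt and self.text_prompt.strip())
        has_image = bool(self.image_path)
        if has_text and has_image:
            return "hybrid"
        if has_text:
            return "text-to-video"
        if has_image:
            return "image-to-video"
        raise ValueError("a request needs a text prompt, an image, or both")
```

For instance, a request carrying both a prompt and a reference image would be classified as `hybrid`, while a request with neither is rejected before any generation is attempted.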

Cinematic AI Video Generation Capabilities of Seedance 2.0

Seedance 2.0 is designed to replicate elements of film production, allowing AI-generated video to meet cinematic and commercial quality standards.

- Simulated camera movement and framing

- Character persistence across shots

- Film-grade lighting, depth, and composition

Why Is ByteDance Investing Heavily in AI Video Models?

ByteDance’s investment in AI video models reflects a broader effort to strengthen platform efficiency, content supply, and long-term monetization.

ByteDance operates one of the world’s largest short-form video ecosystems, where sustained growth depends on a constant flow of engaging visual content. AI video generation offers a way to expand content production capacity while reducing reliance on traditional filming workflows.

ByteDance’s Platform Advantage in AI Video Generation

ByteDance enters the AI video race with structural advantages that most standalone AI labs lack, particularly in distribution and monetization.

- Built-in short-form video distribution at scale

- Strong, existing demand from advertisers and creators

- Native monetization through advertising and commerce integrations

How the ByteDance AI Video Model Fits Its Long-Term Strategy

From a strategic perspective, the ByteDance AI video model is designed to improve cost efficiency while reinforcing platform dominance.

- Lowering content production costs across the ecosystem

- Enabling scalable creative output for brands and platforms

- Building a competitive moat against Western AI video models

Source: ByteDance

Seedance 2.0 Commercial Use Cases: Who Is It Built For?

Seedance 2.0’s commercial use cases center on industries that need scalable, repeatable video production rather than experimental output. Its design reflects the needs of sectors that rely on high-volume video content and structured production workflows, where visual consistency, speed, and cost efficiency are critical.

Advertising and Brand Storytelling

In advertising, Seedance 2.0 supports the rapid creation of brand-aligned video content that can be deployed across multiple channels without traditional production constraints. By enabling AI-generated creatives produced at scale, the model allows brands to conduct faster A/B testing of visual narratives and messaging, reducing campaign iteration time while maintaining visual consistency.

Short Drama and Serialized Content

For entertainment platforms, particularly short-form and episodic drama, Seedance 2.0 enables more efficient content pipelines by reducing dependence on physical sets and production crews. Through episodic AI video generation workflows, studios can maintain narrative continuity across releases while achieving lower production and iteration costs, which is critical for serialized content formats.

E-Commerce and Product Visualization

In e-commerce, the model is used to generate dynamic product videos that can be adapted to different markets and consumer segments. Automated product demonstrations combined with localization across regions, languages, and platforms allow brands and marketplaces to scale visual merchandising without increasing operational complexity.

Seedance 2.0 vs Sora: How Do They Compare in Cinematic AI Video Generation?

The Seedance 2.0 vs Sora comparison reflects two different approaches to cinematic AI video generation, shaped by how each model balances visual quality, creative control, and deployment at scale. It highlights how design priorities influence consistency, production usability, and suitability for commercial environments.

Output Quality and Motion Control

The most visible differences between Seedance 2.0 and Sora emerge in how motion, continuity, and visual stability are handled across scenes. These technical choices determine whether a model is better suited for extended narratives or short, visually striking demonstrations.

- Seedance 2.0: Emphasizes stable, repeatable motion designed to hold up across longer video sequences, with stronger scene-to-scene consistency.

- Sora: Prioritizes expressive, high-impact visuals that showcase creative range, sometimes at the expense of temporal coherence.

- Key difference: Reliability and consistency versus visual experimentation.

Commercial Readiness vs Experimental Focus

Beyond visual output, the two models diverge in how they are positioned for real-world deployment. Their contrasting design goals reflect different assumptions about scale, predictability, and production use.

- Seedance 2.0: Optimized for production pipelines where scalability, predictability, and operational stability are required.

- Sora: Positioned as a frontier research showcase, highlighting what is creatively possible rather than what is immediately deployable.

- Key difference: Production-first architecture versus experimental exploration.

Platform Integration Differences

Platform integration further separates Seedance 2.0 and Sora, shaping how each model is distributed and monetized. These structural differences have long-term implications for commercial adoption.

- Seedance 2.0: Embedded within ByteDance’s ecosystem, enabling native distribution, data feedback loops, and monetization through advertising and content platforms.

- Sora: Operates as a standalone interface with fewer direct platform dependencies.

- Key difference: Integrated platform leverage versus independent model access.

Source: Sora

Seedance 2.0 vs Kling: Key Differences Between China’s Leading AI Video Models

The comparison between Seedance 2.0 and Kling highlights how China’s leading AI video models diverge in design priorities, user focus, and scalability within a rapidly evolving AI video ecosystem.

1. Creative Flexibility vs Production Stability

The primary distinction between Seedance 2.0 and Kling lies in how each model balances creative freedom against production reliability. Kling is oriented toward stylistic experimentation, making it attractive for exploratory or artistic use cases, while Seedance 2.0 is designed to deliver consistent, repeatable output suitable for commercial deployment.

The table below summarizes how this difference affects output quality, enterprise readiness, and ideal use cases:

| Dimension | Seedance 2.0 | Kling |
| --- | --- | --- |
| Creative style | Controlled, cinematic | Highly experimental |
| Output consistency | High | Variable |
| Enterprise suitability | Strong | Limited |
| Ideal use case | Commercial production | Creative exploration |

In practice, this means Seedance 2.0 favors reliability and visual continuity, whereas Kling prioritizes flexibility even if results vary between generations.

2. Target Users: Creators vs Enterprises

Another key difference in the Seedance 2.0 vs Kling comparison is the audience each model is built to serve. Kling primarily targets individual creators and small studios seeking rapid experimentation, while Seedance 2.0 is positioned for advertisers, platforms, and enterprise teams operating at scale.

The following comparison outlines how user focus influences workflow complexity and collaboration support:

| Dimension | Seedance 2.0 | Kling |
| --- | --- | --- |
| Primary users | Enterprises | Creators |
| Workflow complexity | Structured | Lightweight |
| Team collaboration | Supported | Limited |

This distinction affects adoption paths, with Seedance 2.0 aligning more closely with structured production environments and Kling favoring flexible, creator-led workflows.

3. Speed of Deployment and Scaling

Scalability further separates the two AI video models. Seedance 2.0 benefits from platform-level rollout through ByteDance’s ecosystem, enabling faster deployment across large user bases. Kling, while capable of rapid iteration, faces constraints when scaling beyond individual or small-team use.

The table below compares their readiness for mass adoption and infrastructure maturity:

| Dimension | Seedance 2.0 | Kling |
| --- | --- | --- |
| Scalability | Mass adoption | Limited |
| Infrastructure | Enterprise-grade | Creator-grade |

As China’s AI video market matures, these differences suggest Seedance 2.0 is better positioned for long-term platform integration, while Kling remains more suited to experimental and niche creative applications.

What Does Seedance 2.0 Signal About AI Video Models in China?

Seedance 2.0 offers insight into how AI video models in China are evolving in response to commercial demand, platform integration, and regulatory structure, rather than purely technical experimentation.

China’s Focus on Cinematic AI Video Generation

Seedance 2.0 reflects a broader industry shift toward cinematic AI video generation that prioritizes production quality and narrative control. This approach suggests that AI video models in China are increasingly judged by their ability to support real commercial workflows, not just visual novelty.

- Film-grade realism over novelty

- Alignment with commerce and entertainment

Regulatory and Platform-Driven Innovation

The model also highlights how regulatory expectations and platform ownership influence AI development in China. By embedding AI video generation directly into large platforms, companies can move faster from testing to deployment while maintaining compliance.

- Platform-led compliance

- Faster commercialization

Global Implications for AI Competition

At a global level, Seedance 2.0 signals a divergence in how AI video models are developed and deployed across regions. China’s platform-centric approach increases competitive pressure on global platforms that rely on standalone AI models without integrated distribution.

- Diverging AI strategies

- Increased pressure on global platforms

How Can Users Gain Exposure to AI Growth via Bitget Wallet RWA Features?

As AI infrastructure becomes a major driver of market growth, tokenized real-world assets provide an alternative way to gain exposure beyond traditional equity markets. RWA structures allow AI-related assets to be accessed on-chain while remaining tied to regulated underlying instruments.

What Are Tokenized AI Stocks and RWAs?

Tokenized AI stocks and RWAs represent traditional AI-related equities in an on-chain format, allowing users to interact with them through blockchain-based infrastructure. This approach enables regulated exposure to AI companies without relying solely on conventional brokerage accounts.

- AI equities represented on-chain

- Regulated exposure without brokers

Why Does Bitget Wallet Matter for AI-Related RWAs?

Bitget Wallet provides a unified environment for managing tokenized traditional assets alongside crypto-native holdings, reducing friction between Web2 and Web3 markets.

- Zero-fee trading on RWA U.S. stock tokens

- Unified access alongside crypto assets

- One interface for AI and traditional exposure

Bitget Wallet enables users to access AI-related stocks via RWA tokens while managing stablecoins and cross-chain assets in one place.

Conclusion

Seedance 2.0 ultimately represents a shift from experimental AI video generation toward scalable, cinematic, and commercially viable systems. Comparing Seedance 2.0 with Sora and Kling makes clear that ByteDance is prioritizing production stability, platform integration, and real-world use cases.

Zero-fee trading on RWA U.S. stock tokens allows users to explore AI-related RWA opportunities and manage AI stocks, stablecoins, and cross-chain assets in one place. Download Bitget Wallet to access tokenized AI exposure through a beginner-friendly, non-custodial interface.

Sign up for Bitget Wallet now - grab your $2 bonus!

FAQs

1. What Is Seedance 2.0?

Seedance 2.0 is ByteDance’s cinematic AI video model built for commercial video production rather than experimental demos. It is designed to generate high-fidelity video with stronger temporal coherence and production stability.

2. What is Seedance 2.0 used for?

Seedance 2.0 is used for advertising, short drama, and e-commerce video generation where consistent visual quality and scalability are required. These use cases reflect its focus on real-world commercial deployment.

3. How is Seedance 2.0 different from Sora and Kling?

Seedance 2.0 differs from Sora and Kling by prioritizing production stability, platform integration, and repeatable outputs. In contrast, Sora and Kling emphasize experimental capabilities and creative exploration over large-scale commercial use.

4. Can investors benefit from AI video growth indirectly?

Yes, investors can benefit indirectly from AI video growth through AI-related equities and tokenized RWAs. Platforms such as Bitget Wallet provide access to RWA U.S. stock tokens, enabling exposure to AI-sector growth through on-chain infrastructure.

Risk Disclosure

Please be aware that cryptocurrency trading involves high market risk. Bitget Wallet is not responsible for any trading losses incurred. Always perform your own research and trade responsibly.
